The scientific method is fairly straightforward. You formulate a hypothesis to explain some observations, devise a way of testing it, then test it and either verify it or discard it.

Over time, hypotheses that were once in favour are found to conflict with some observations, and are either modified or discarded and replaced by new ideas.

Scientists are human and have human frailties. As a result, the observations sometimes warrant discarding a heavily favoured hypothesis in favour of another, yet this does not happen and the scientific community clings to the older idea.

This is much more likely to happen if the older idea belongs to a larger, interrelated set of ideas that we might call a paradigm. The last significant paradigm shift was the plate tectonics revolution, which overthrew geosynclinal theory (along with many other aspects of the earth sciences). Arguably, we are entering another paradigm shift, in which we may be replacing (or augmenting) the Newtonian mechanistic worldview with Leibnizian metaphysics.

Can scientists be held liable for scientific errors?

Not in a legal sense. But isn't there some kind of stigma attached to making errors of the worst kind?

For most scientists, avoiding error is a character trait. It is reflected in their diligence and their need to get things right. But the trait has a downside, which shows itself when the scientist in question has been in error, and especially when that error has persisted over a very long time.

Some years ago I was contracted to take some scientific software that had been designed for a mainframe and modify it to run on a PC. This was in 1989, and the client had the first 486 machine I had ever seen. It was a tower model (another first) and cost over $11,000. The program used a particular algorithm, the Blackman-Tukey algorithm, to estimate the Fourier power spectrum of a time series. The time series was usually a paleoclimatic data set; in my client's case, it consisted either of isotopic data or of relative abundances of certain species of foraminifera from deep-ocean cores.

The software in question was widely used by Quaternary paleoclimatologists to assess whether their time series contained cycles. In particular, most were looking for evidence of astronomical forcing of climate change, and the majority of the spectral energy was found in cycles of approximately 100,000 years (changes in the eccentricity of the earth’s orbit), 40,000 years (variations in the earth’s axial tilt) and about 23,000 years (precession of the earth’s rotational axis).

Cycles of other lengths appear as well. Some arise from nonlinear combinations of the orbital parameters above; others reflect characteristic resonances of dynamic systems within the earth system and their responses to astronomical forcing.
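A spectrum is plotted against frequency rather than period, so it can help to convert. The sketch below turns the three orbital periods quoted above into frequencies (cycles per thousand years) and lists the first-order sum and difference tones that a quadratic nonlinearity would generate from them. It only illustrates the arithmetic behind "nonlinear combination"; which of these tones actually shows up in a given record is an empirical question, and the function names are hypothetical.

```python
# Orbital periods quoted in the text, in thousands of years (kyr).
PERIODS_KYR = {"eccentricity": 100.0, "obliquity": 40.0, "precession": 23.0}


def frequency(period_kyr):
    """Convert a period in kyr to a frequency in cycles per kyr."""
    return 1.0 / period_kyr


def combination_tones(periods):
    """First-order sum and difference tones from a quadratic nonlinearity.

    Returns {label: period_kyr} for f_i + f_j and |f_i - f_j| over all pairs.
    """
    names = list(periods)
    tones = {}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            fa, fb = frequency(periods[a]), frequency(periods[b])
            tones[f"{a} + {b}"] = 1.0 / (fa + fb)
            tones[f"|{a} - {b}|"] = 1.0 / abs(fa - fb)
    return tones


if __name__ == "__main__":
    for label, period in combination_tones(PERIODS_KYR).items():
        print(f"{label:28s} {period:6.1f} kyr")
```

For example, eccentricity and obliquity combine to give tones near 28.6 kyr (sum) and 66.7 kyr (difference).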

The software was part of a very large package associated with SPECMAP. Much of the SPECMAP analysis still forms the basis of widely available records (including those used by this author). I don’t have an issue with the software used to produce the averaged, dated curves of various parameters through the Quaternary. My issue is with some of the conclusions published in the past.

The Blackman-Tukey algorithm is now generally considered archaic. In fact, it was considered archaic even in 1989, but it was widely used, partly because the confidence level of its results was easily quantified. Also, as in any human endeavour, scientists like to do what other scientists are doing: it gives them confidence that they are doing the right thing.
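For readers who have not met it, the Blackman-Tukey method estimates a power spectrum by Fourier-transforming the sample autocovariance after multiplying it by a lag window. Below is a minimal NumPy sketch of the textbook form. The Hann-shaped lag window, the `max_lag` value and the frequency grid are assumptions made here; this is not a reconstruction of the code in the SPECMAP package.

```python
import numpy as np


def autocovariance(x, max_lag):
    """Biased sample autocovariance r[k] for lags k = 0..max_lag."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    n = len(x)
    return np.array([np.dot(x[: n - k], x[k:]) / n for k in range(max_lag + 1)])


def blackman_tukey(x, max_lag, n_freq=256):
    """Blackman-Tukey spectral estimate using a Hann (Tukey) lag window.

    Returns (freqs, power), with freqs in cycles per sample from 0 to 0.5.
    """
    r = autocovariance(x, max_lag)
    k = np.arange(max_lag + 1)
    # Lag window: 1 at lag 0, falling smoothly to 0 at max_lag.
    lag_window = 0.5 * (1.0 + np.cos(np.pi * k / max_lag))
    freqs = np.linspace(0.0, 0.5, n_freq)
    # S(f) = w0*r0 + 2 * sum_{k>=1} w_k * r_k * cos(2*pi*f*k)
    cosines = np.cos(2.0 * np.pi * np.outer(freqs, k[1:]))
    power = lag_window[0] * r[0] + 2.0 * cosines @ (lag_window[1:] * r[1:])
    return freqs, power


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t = np.arange(400)
    # Synthetic series: a 23-sample cycle plus white noise.
    x = np.sin(2 * np.pi * t / 23) + 0.5 * rng.standard_normal(t.size)
    freqs, power = blackman_tukey(x, max_lag=60)
    print("peak at period", 1 / freqs[np.argmax(power)], "samples")
```

The choice of `max_lag` is the usual trade-off: a larger value gives finer frequency resolution but a noisier estimate.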

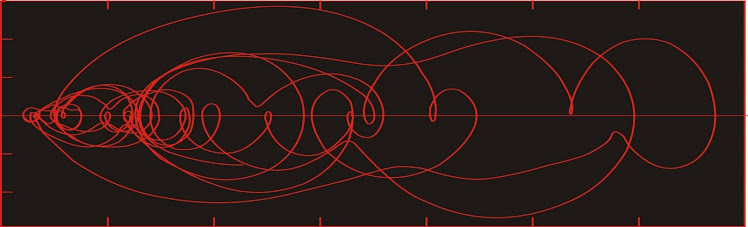

The program made two estimates of the power spectrum from a given data set: one from a high-resolution subsample of the original data and one from a low-resolution subsample. To be considered significant, a spectral peak had to be higher in the hi-res spectral estimate than in the low-res estimate by some multiple.
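The comparison rule can be sketched as follows. The post does not give the actual multiple or say how the two spectra were aligned, so `factor` is a hypothetical parameter, and the sketch assumes both estimates have already been evaluated on the same frequency grid. Only local maxima of the hi-res spectrum are treated as candidate peaks.

```python
import numpy as np


def flag_significant_peaks(freqs, hi_power, lo_power, factor=2.0):
    """Frequencies where a hi-res peak exceeds the low-res estimate by `factor`.

    Assumes both spectra share the frequency grid `freqs`.
    `factor` is a hypothetical stand-in for the unspecified multiple.
    """
    freqs = np.asarray(freqs, dtype=float)
    hi = np.asarray(hi_power, dtype=float)
    lo = np.asarray(lo_power, dtype=float)
    if not (freqs.shape == hi.shape == lo.shape):
        raise ValueError("spectra must share one frequency grid")
    # Interior local maxima of the hi-res spectrum are the candidate peaks.
    is_peak = np.zeros(hi.shape, dtype=bool)
    is_peak[1:-1] = (hi[1:-1] > hi[:-2]) & (hi[1:-1] > hi[2:])
    # A candidate passes only if it stands well above the low-res estimate.
    passes = hi > factor * lo
    return freqs[is_peak & passes]
```

Note that the test leans on the low-res estimate as much as on the hi-res one: anything that distorts `lo_power` changes which peaks pass.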

To implement this, there was one subroutine for drawing the appropriate subsamples and a second for designing a “window” to be applied to both subsamples. The ends of a data set must be tapered before its spectral estimate is calculated, or else there will be a lot of noise in the power spectrum. If the two endpoints don’t match, the effect is like adding an impulse to the original data, and the resulting noise has energy at all frequencies.
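The endpoint problem is easy to demonstrate. The sketch below builds a cosine that completes 37.5 cycles over 512 samples, so its first and last values do not match, and measures how much periodogram power lands more than 20 bins away from the true peak, with and without a taper. The test signal, the 20-bin guard band and the use of NumPy's Hann window (`np.hanning`) are illustrative assumptions.

```python
import numpy as np


def far_leakage(x, window, peak_bin, guard=20):
    """Fraction of periodogram power lying more than `guard` bins from `peak_bin`."""
    power = np.abs(np.fft.rfft(x * window)) ** 2
    bins = np.arange(power.size)
    far = np.abs(bins - peak_bin) > guard
    return power[far].sum() / power.sum()


n = 512
t = np.arange(n)
# 37.5 cycles over the record: the first value is +1 and the last is near -0.9,
# so the endpoints do not match when the series is treated as periodic.
x = np.cos(2.0 * np.pi * 37.5 * t / n)

untapered = far_leakage(x, np.ones(n), peak_bin=37.5)
tapered = far_leakage(x, np.hanning(n), peak_bin=37.5)
print(f"far-from-peak power: untapered {untapered:.2e}, Hann-tapered {tapered:.2e}")
```

Without the taper, the mismatch at the ends spreads power across the whole spectrum; with it, almost all of the power stays near the true frequency.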

An effective window tapers the data by reducing the endpoints to zero, but doesn't change the rest of the data very much.

The subroutine in question calculated the window coefficients, which ranged from zero (at the ends) to one (across the middle). Since the two subsamples being compared each consisted of a different number of observations, a separate window had to be calculated for the hi-res and low-res subsamples.
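A window with exactly the shape described here, zero at both ends and one across the middle, is a split-cosine-bell (Tukey) taper. The sketch below computes such coefficients and, crucially, builds one window per subsample from that subsample's own length. The post does not say which taper the original subroutine used, so the cosine shape and the 10% taper fraction are assumptions, and drawing the low-res subsample as every other point is purely hypothetical.

```python
import numpy as np


def cosine_taper(n, frac=0.1):
    """Split-cosine-bell taper of length n: 0 at both ends, 1 across the middle.

    `frac` is the (assumed) fraction of the series tapered at each end.
    """
    if not 0.0 <= frac <= 0.5:
        raise ValueError("frac must be between 0 and 0.5")
    w = np.ones(n)
    m = int(frac * n)  # number of samples in each tapered end
    if m > 0:
        # Half a cosine cycle rising from 0 toward 1.
        ramp = 0.5 * (1.0 - np.cos(np.pi * np.arange(m) / m))
        w[:m] = ramp
        w[n - m:] = ramp[::-1]
    return w


# One window per subsample, each sized to that subsample.
hi_res = np.random.default_rng(1).standard_normal(400)
lo_res = hi_res[::2]  # hypothetical low-res subsample: every other point
w_hi = cosine_taper(len(hi_res))
w_lo = cosine_taper(len(lo_res))
tapered_hi = hi_res * w_hi
tapered_lo = lo_res * w_lo
```

The key line is `cosine_taper(len(lo_res))`: the low-res window must be computed from the low-res length, not borrowed from the hi-res one.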

In this implementation, there was an error in the subroutine that calculated the window coefficients, the equivalent of failing to dot an ‘i’ or cross a ‘t’. It was a trivial error in the sense that the program still ran. But its output was meaningless.

The programmer forgot to calculate the window coefficients for the low-res subsample and simply applied the coefficients of the hi-res window. Because the low-res subsample was shorter, the data ran out while the window was still near its middle, so the end of the subsample was never tapered.
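The bug itself takes one line to reproduce. In the sketch below, NumPy's Hann window stands in for whatever taper the original subroutine produced, and the subsample lengths are hypothetical. Slicing the hi-res window to the low-res length keeps its rising left half but never reaches its falling right half.

```python
import numpy as np


def buggy_low_res_window(n_hi, n_lo):
    """Reproduce the bug: reuse the hi-res window for the shorter low-res subsample.

    Only the first n_lo coefficients of the length-n_hi window are applied.
    """
    return np.hanning(n_hi)[:n_lo]


def correct_low_res_window(n_lo):
    """What the subroutine should have done: a window sized to the low-res subsample."""
    return np.hanning(n_lo)


n_hi, n_lo = 512, 256  # hypothetical subsample sizes
bad = buggy_low_res_window(n_hi, n_lo)
good = correct_low_res_window(n_lo)
print(f"first coefficient: buggy {bad[0]:.3f}, correct {good[0]:.3f}")
print(f"last coefficient:  buggy {bad[-1]:.3f}, correct {good[-1]:.3f}")
```

Both windows start at zero, but the buggy one ends at roughly 1 (the middle of the hi-res window), so the last low-res observation passes through untapered.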

The low-res spectral estimate was therefore calculated from a subsample that was tapered on only one side, and noise was smeared through the entire spectrum. The overall effect was to greatly inflate the apparent significance of the spectral peaks in the high-resolution estimate.
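To see how much a one-sided taper matters, the sketch below tapers the same hypothetical low-res subsample two ways, once with a window of its own length and once with the truncated hi-res window, and measures how much periodogram power falls more than 20 bins from the true peak. The test signal, the Hann window and the guard band are assumptions. This shows only the leakage, not the full significance calculation.

```python
import numpy as np


def far_leakage(x, window, peak_bin, guard=20):
    """Fraction of periodogram power lying more than `guard` bins from `peak_bin`."""
    power = np.abs(np.fft.rfft(x * window)) ** 2
    bins = np.arange(power.size)
    far = np.abs(bins - peak_bin) > guard
    return power[far].sum() / power.sum()


n_hi, n_lo = 512, 256  # hypothetical subsample sizes
t = np.arange(n_lo)
x_lo = np.cos(2.0 * np.pi * 18.75 * t / n_lo)  # hypothetical low-res subsample

correct = far_leakage(x_lo, np.hanning(n_lo), peak_bin=18.75)
# One-sided taper: the first n_lo coefficients of the hi-res window.
buggy = far_leakage(x_lo, np.hanning(n_hi)[:n_lo], peak_bin=18.75)
print(f"far-from-peak power: correct {correct:.2e}, buggy {buggy:.2e}")
```

With the correct window, the far-from-peak power is negligible. With the truncated one, the untapered end acts like the endpoint mismatch described earlier and spreads power across the spectrum, which is exactly the kind of distortion that undermines a peak-versus-background comparison.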

Considering that the significance of the spectral peaks was the main point of many, many academic publications using this software, this was potentially a major issue.

As fate would have it, one of the originators of this software package was coming to my little university to deliver a lecture on something or other (not spectral analysis, but possibly some results using it). So I took the opportunity to ask him afterwards (privately) about the problems outlined above.

Picture the great Shackleton, author of hundreds of papers and head of this international movement to apply Blackman-Tukey spectral estimates to paleoclimatological data, being approached by a young graduate student about some minor technical detail in the SPECMAP series of programs.

From his response I understood immediately that he had not grasped my point, and from the way he flew into a rage it was clear that reasonable discussion was impossible. He simply shouted, "The software works. The software works!" Clearly he had just hired some programmer to write the software. His overreaction was unexpected and left me at a loss.

This is the human condition. He could not admit to a problem with the software, since his name was on it and it was in wide use. Had the error been pointed out before distribution, it would have been a different matter.

Over the next couple of years I raised the problem with a few of the scientists who were using the software, and they couldn't understand it either. Everybody was using it, so everyone was confident that it worked. Moreover, the users had no fundamental understanding of the mathematics of the Fourier transform or of the limitations of its application, which led to a cavalier approach.

The entire situation was appalling.

The transform was treated like an infallible black box. The data went in one end, and usable results came out. Or so it was assumed.

Has anything changed? Only the black box. Instead of using custom-written software, most of these guys now use Matlab or its equivalent. I will agree that Matlab is probably better written (and has been through more iterations) than the Pisias/Shackleton software, but an important problem remains.

The scientists are not fully aware of the mathematics that they are using.

Update (October 25):

To reiterate an earlier point: there comes a time when emotional involvement with an idea is so strong that one cannot easily discard it, even in the face of contradictory evidence. With the SPECMAP story, I have no doubt that had I discovered the flaw when the programs were released, it would have been easier for Shackleton to accept its existence. But at the time of our discussion, the programs had been in common use for several years, and many refereed publications had presented conclusions based on them. By then the whole SPECMAP thing was like a vast armada sailing across the ocean, scarcely able to notice the lunatic in a lifeboat trying to wave it back with an oar while shouting, "Turn back! Turn back! You're about to sail over the edge!"

It is a human thing, not a scientific thing.

Data into garbage, garbage out?
